Since our focus at CrowdTamers is startup go-to-market, we talk a lot about launching.

But there’s something scary about your first launch: you’ve never shown anyone what you’re up to before and maybe you suck at it. Maybe not?

How do you know?

Most entrepreneurs aren’t master designers. Heck, I used to be a designer for a living and some of the first ad creatives I created back in the day looked like this.

Running your first campaign can be stressful because you’re going to spend hundreds of dollars (or more!) and maybe no one will like it.

A new client of mine, Hippoc, has developed a cool tool to help with that exact problem. It simulates the attention of thousands of people and tells you what will work and what won’t, based on AI dark sorcery.

I’ve run a few ad campaigns for them now, so let’s see how their forecasting compares to the actual results we achieved.

Campaign 1 – First targeting test

Our first test was just validating a key idea: does Hippoc make more headway with designers, or with marketers who use Canva but aren’t designers?

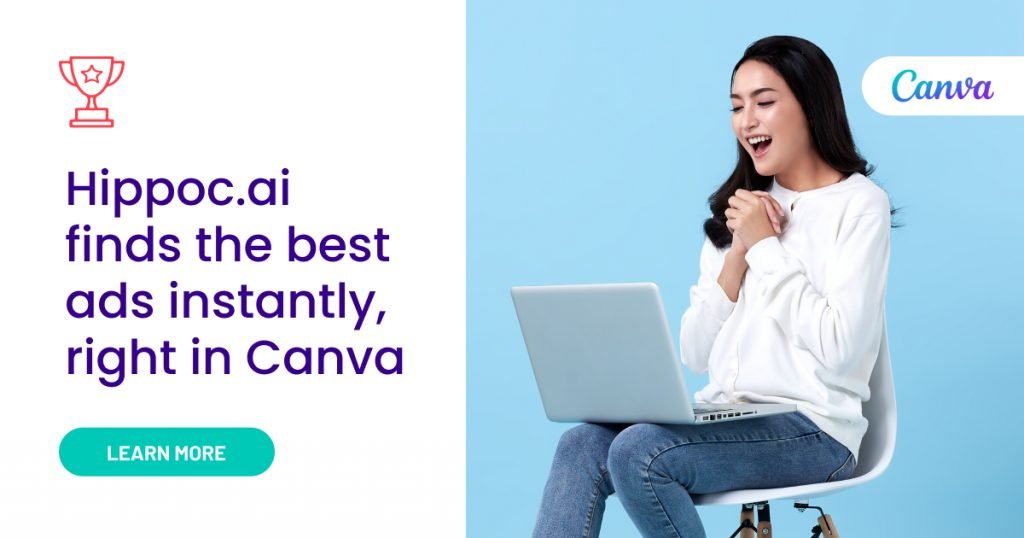

Here’s the ad creative that ran:

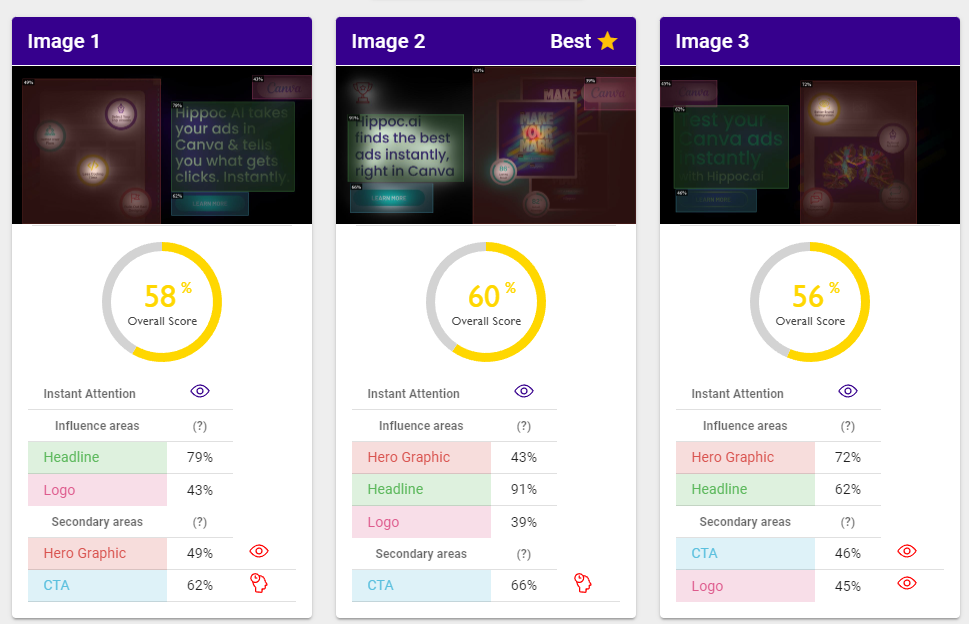

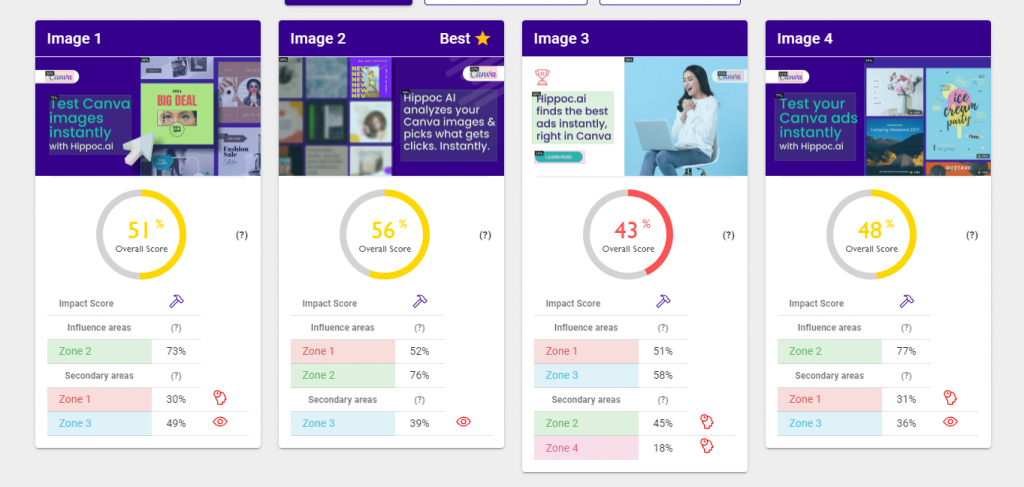

And then I asked Hippoc’s own AI which ad creatives it thought would draw the most attention and what parts of them were most interesting:

It’s worth noting that there are several ways that the AI looks at the ads—initial interest is just one of them. With that said, it looks like these ads are not hugely different, but that the middle ad should do best.

Facebook ER1 Ad Results

| Name | Visits | Impressions | Click-Through Rate |
|---|---|---|---|
| Variant 1 | 4 | 6605 | 0.06% |
| Variant 2 | 7 | 5532 | 0.126% |
| Variant 3 | 11 | 8929 | 0.123% |

That’s exactly how it played out on Facebook: the middle ad came out on top, narrowly. Overall performance here was…bad, though. Not even cracking two-tenths of one percent means that these ads were at about 10% of the performance I’d expect to see on a top-of-funnel ad campaign. These Facebook ads were largely targeted to designers who used Canva. We also ran ads on Twitter, but targeted them more broadly to US users who followed @Canva on Twitter.
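For anyone who wants to check the math, here is a minimal sketch of how the click-through rates in the Facebook table are derived, assuming CTR is simply visits divided by impressions. The function name `ctr_percent` is made up for illustration; the numbers are copied from the table.

```python
# Facebook ER1 numbers copied from the table: (visits, impressions)
facebook_er1 = {
    "Variant 1": (4, 6605),
    "Variant 2": (7, 5532),
    "Variant 3": (11, 8929),
}


def ctr_percent(visits, impressions):
    """Click-through rate as a percentage of impressions (assumed definition)."""
    return 100 * visits / impressions


# Print each variant's CTR, then the variant with the highest CTR.
for name, (visits, impressions) in facebook_er1.items():
    print(f"{name}: {ctr_percent(visits, impressions):.3f}%")

best = max(facebook_er1, key=lambda name: ctr_percent(*facebook_er1[name]))
print(f"Best performer: {best}")
```

The last digit can differ slightly from the table depending on whether you round or truncate.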

Twitter ER1 Ad Results

| Name | Visits | Impressions | Click-Through Rate |
|---|---|---|---|
| Variant 1 | 187 | 22008 | 0.85% |
| Variant 2 | 118 | 17494 | 0.67% |
| Variant 3 | 104 | 14533 | 0.72% |

The overall performance here was much better, roughly 7x the pooled click-through rate of the ads on Facebook, but the champion here was Hippoc’s #2 pick, not its favorite. In any case, these ads still aren’t great: they’re well shy of what a good ad campaign should achieve.

Our conclusion from the CTRs was that the non-designer audience was much more interested than designers were in impartial, AI-based validation of their ad creatives.
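One way to put a single number on the platform gap is to pool visits and impressions across all three variants on each platform. This is a rough sketch, not a statistical test; `pooled_ctr` is a hypothetical helper, and the figures are copied from the two ER1 tables.

```python
# ER1 results copied from the two tables: (visits, impressions) per variant.
facebook = [(4, 6605), (7, 5532), (11, 8929)]
twitter = [(187, 22008), (118, 17494), (104, 14533)]


def pooled_ctr(rows):
    """Total visits over total impressions, as a percentage."""
    visits = sum(v for v, _ in rows)
    impressions = sum(i for _, i in rows)
    return 100 * visits / impressions


fb_ctr = pooled_ctr(facebook)
tw_ctr = pooled_ctr(twitter)

print(f"Facebook pooled CTR: {fb_ctr:.2f}%")
print(f"Twitter pooled CTR:  {tw_ctr:.2f}%")
print(f"Twitter / Facebook:  {tw_ctr / fb_ctr:.1f}x")
```

Pooling weights each variant by its impressions, so a variant that got more delivery counts for more. Averaging the three per-variant CTRs instead would give a slightly different multiple.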

| Hippoc’s Pre-test | Real-World Result |
|---|---|
| The ads won’t perform hugely differently | ✔ |
| The ads are, in general, weak | ✔ |
| Ad #2 is best | ❌-ish |

So the ad campaigns that we ran cost a total of $296.97 and took 4 days. And most everything that we learned about the ad creatives themselves, we could have learned for free in Hippoc in about 90 seconds.

That’s a pretty good value for time and money spent.

Let’s check the follow-up campaign as well.

Campaign 1a – Iterating on targeting test

Our 1a variant test was further validation of the hypothesis that “non-designers are more likely to want this tool.” I also wanted to test a few truisms of marketing design here: faces get more clicks, buttons get more clicks, and white backgrounds do better. Here are the images we tested:

The basic idea of the ads wasn’t hugely different, but we don’t want to make all-new ad creatives for a variant test anyway.

Hippoc’s pre-test already had some results that surprised me. I had put my thumb on the scale to have our designer Ram make a sure-fire winning image: one with emotion, a human face, a CTA, and a white background for the text.

And Hippoc hated it.

Stupid computer, I said. It doesn’t know anything.

Something worth noting here is that Hippoc isn’t grading your copy; it’s evaluating the overall impact and attractiveness of the visuals.

That means my pre-tests here aren’t perfect. A perfect test would use identical copy with different layouts. When you test many different creatives with different copy, you have less clarity on what caused the difference in CTRs.

That said, when you’re iterating quickly you can eventually backfill that data through other tests, and varying the creatives helps prevent impression fatigue when the same person keeps seeing ads in your mix.

Facebook ER1a Ad Results

| Name | Visits | Impressions | Click-Through Rate |
|---|---|---|---|
| Variant 1 | 54 | 7086 | 0.86% |
| Variant 2 | 47 | 6805 | 0.78% |
| Variant 3 | 50 | 9995 | 0.61% |
| Variant 4 | 43 | 6743 | 0.80% |

Now, Hippoc is a bit wide of the mark here on the top performer (and I believe I know why), but damn if it wasn’t right about the image I was convinced would be the champion.

I’m pretty sure that the copy on the winner, “Test Canva images instantly,” is simply more appealing than “Hippoc.ai analyzes your Canva images & picks what gets clicks. Instantly.”

Twitter ER1a Ad Results

We simplified our ad creatives on Twitter (only running Variants 1 and 3), but this time targeted them more like we had on Facebook in ER1. That way, we can see whether Twitter is just a better ad platform than Facebook or whether the targeting we used in the last experiment made the difference.

| Name | Visits | Impressions | Click-Through Rate |
|---|---|---|---|
| Variant 1 | 151 | 38341 | 0.39% |
| Variant 3 | 139 | 38273 | 0.34% |

Much closer, but overall ad performance here is much worse with targeting that explicitly includes graphic designers.

| Hippoc’s Pre-test | Real-World Result |
|---|---|
| V1, 2 & 4 ads are similar | ✔ |
| Ad #3 is bad | ✔ |
| Overall still aren’t great | ✔ |
| Variant #2 is best | ❌ |

Again, Hippoc didn’t give us a perfect result as to what was best, but it did correctly group the 3 pretty good ad creatives closely together. It also correctly identified the losing variant, which flew in the face of a lot of assumed wisdom that I’ve accrued from 20 years in digital marketing.
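To make the “grouped closely together” claim concrete, here is a small sketch using the click-through rates as reported in the Facebook ER1a table. The variable names are made up for illustration; the grouping (V1, V2, V4 versus V3) comes from Hippoc’s pre-test.

```python
# Reported Facebook ER1a click-through rates (percent), copied from the table.
reported_ctr = {
    "Variant 1": 0.86,
    "Variant 2": 0.78,
    "Variant 3": 0.61,
    "Variant 4": 0.80,
}

# Rank variants from highest to lowest reported CTR.
ranked = sorted(reported_ctr.items(), key=lambda item: item[1], reverse=True)
winner, loser = ranked[0][0], ranked[-1][0]

# Spread among the three variants Hippoc grouped together (V1, V2, V4).
grouped = [reported_ctr[v] for v in ("Variant 1", "Variant 2", "Variant 4")]
group_spread = round(max(grouped) - min(grouped), 2)

# Gap between the weakest grouped variant and the predicted loser (V3).
gap_to_v3 = round(min(grouped) - reported_ctr["Variant 3"], 2)

print(f"winner: {winner}, loser: {loser}")
print(f"spread within group: {group_spread} points")
print(f"gap from group to Variant 3: {gap_to_v3} points")
```

The gap between the group and Variant 3 is about twice the spread inside the group, which is what “correctly grouped” means here. With impression counts this small, treat it as a directional read rather than proof.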

Hippoc is good for

- Pre-test overall creative performance

- Gut check on ad design impact

- Saving you money & preventing loser ad designs

Hippoc isn’t good for

- Validating ad targeting

- Checking copy quality

- Predicting outcomes in varied audiences

This was super cool to run and analyze. Thanks to Kevin at Hippoc for agreeing to a write-up that’s this transparent.

We’ll be continuing to run more campaigns with the Hippoc crew for a few more months. I’ll post another writeup after we’ve run a few more ad creatives through them. So far, it looks like a great tool to have in your toolbox to pre-test design ideas before you spend money on ’em.

Questions? Hit me up @trevorlongino on Twitter or go find the Hippoc folks at @HippocN.